Mastering Docker: A Complete Guide with Best Practices

1. What is Docker?

Docker is an open-source platform for containerization that packages applications and their dependencies into units called containers. These containers are lightweight, portable, and allow developers to build, ship, and run applications consistently across different environments.

Key Features:

Lightweight and Portable: Containers share the host OS kernel, making them faster and less resource-hungry than virtual machines.

Consistency: Develop locally, ship to production, and run without compatibility issues.

Faster Deployment: Containers launch almost instantly, improving efficiency.

2. Docker Workflow

Dockerfile → Docker Image → Docker Container

Dockerfile: Blueprint containing instructions for building the Docker image.

Docker Image: Snapshot of your application environment (read-only).

Docker Container: A running instance of the Docker image (mutable and isolated).
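To make the workflow concrete, here is a minimal sketch of a Dockerfile for a hypothetical Python script named app.py. The script, the python:3.12-slim base image, and the image name hello-docker are assumptions for illustration. The closing comments show the two commands that turn the Dockerfile into an image and then into a container.

```dockerfile
# Dockerfile: the blueprint (assumes a hypothetical app.py in the build context)
FROM python:3.12-slim

# Put the application inside the image
WORKDIR /app
COPY app.py .

# Default command for every container created from this image
CMD ["python", "app.py"]

# Dockerfile -> image:     docker build -t hello-docker .
# Image -> container:      docker run --rm hello-docker
```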

3. Docker Containers vs. Virtual Machines

| Feature | Docker Containers | Virtual Machines (VMs) |
|---|---|---|
| Resource Efficiency | Lightweight, less CPU and memory usage | Heavy, requires more CPU and memory |
| Startup Time | Fast (seconds) | Slow (minutes) |
| Isolation | Shares host OS kernel, lighter isolation | Runs a full OS, stronger isolation |
| Ideal Use | Consistent app development and deployment | Scenarios needing strong isolation, like testing OSes |
| OS Usage | Multiple containers share a single OS | Each VM runs its own separate OS |

In general, containers are ideal for developing and deploying applications consistently across multiple environments, while VMs are often preferred for higher isolation needs.

4. Understanding the Dockerfile

A Dockerfile is a text file containing instructions that define how to build a Docker image. It specifies the base image, commands, files, dependencies, and configuration for the image.

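Docker executes these instructions sequentially from top to bottom. Instructions that modify the filesystem (RUN, COPY, and ADD) each add a new layer to the image, while others, such as ENV or CMD, only record metadata in the image configuration.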

Dockerfile Instructions with Purpose and Examples

| Instruction | Purpose | Example |
|---|---|---|
| FROM | Defines the base image for the build | FROM ubuntu:20.04 |
| COPY | Copies files from the build context into the image | COPY ./app /usr/src/app |
| ADD | Like COPY, but also fetches URLs and extracts local tar archives | ADD app.tar.gz /usr/src/ |
| RUN | Runs commands during the image build | RUN apt-get update && apt-get install -y git |
| CMD | Sets the default command at runtime | CMD ["nginx", "-g", "daemon off;"] |
| ENTRYPOINT | Sets the main executable for the container | ENTRYPOINT ["java", "-jar", "app.jar"] |
| ENV | Sets environment variables | ENV APP_HOME=/app |
| ARG | Defines build-time variables | ARG VERSION=1.0 |
| WORKDIR | Sets the working directory | WORKDIR /usr/src/app |
| USER | Switches the user for later instructions and at runtime | USER john |
| EXPOSE | Documents the ports the container listens on | EXPOSE 8080 |

A Guide to Key Docker Instructions and Concepts

Docker instructions form the backbone of a Dockerfile and define everything needed to build, configure, and run Docker containers. Each instruction serves a unique purpose in configuring the environment or specifying runtime commands for the container. Let’s explore each of these in more detail:


1. FROM Instruction [BASE IMAGE]

The FROM instruction specifies the base image for your custom image. It sets the starting point for your Dockerfile.

Example:

FROM tomcat:9.0-jre11

2. COPY Instruction

The COPY instruction copies files or directories from the host machine into the Docker image during the build process. Source paths are resolved relative to the build context (the directory passed to docker build, usually the one containing the Dockerfile), so files outside the context cannot be copied.

Syntax:

COPY <source_path> <destination_path>

Example:

COPY target/web-app.war /usr/local/tomcat/webapps/web-app.war

3. ADD Instruction

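The ADD instruction is similar to COPY, but it offers additional features: it can fetch files from remote URLs, and it automatically extracts local tar archives. Files downloaded from a URL are not extracted. For plain local files, COPY is the more predictable choice.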

Example:

ADD target/web-app.war /usr/local/tomcat/webapps/web-app.war

ADD https://example.com/file.tar.gz /app/
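The difference between COPY and ADD for archives can be sketched as follows, assuming a hypothetical gzip-compressed tar archive named app.tar.gz in the build context. The URL is the placeholder from the example above and would need to point at a real file for the build to succeed.

```dockerfile
FROM ubuntu:20.04

# COPY places the archive in the image unchanged: /opt/copied/app.tar.gz
COPY app.tar.gz /opt/copied/

# ADD recognizes a local tar archive and unpacks its contents into /opt/extracted/
ADD app.tar.gz /opt/extracted/

# A remote file fetched by ADD is saved as-is; it is NOT extracted
ADD https://example.com/file.tar.gz /opt/downloaded/
```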

4. RUN Instruction

The RUN instruction executes commands or scripts while building the image, following the order from top to bottom.

Forms: Supports both Shell Form and Executable Form.

Shell Form: This form runs commands in a shell (/bin/sh -c on Linux), so shell features such as the operators |, &&, and || are available. It is useful for chaining commands or setting up pipelines. Example:

RUN mkdir /app && \
    cp /src/* /app/ && \
    useradd niranjan && \
    apt-get update -y && \
    apt-get install -y git

Executable Form: This form runs commands directly, without a shell.

Example:

RUN ["echo", "Hello World"]

Note: Shell Form is the usual choice for RUN because it supports shell features such as variable expansion and command chaining. Chaining related commands with && in a single RUN instruction also reduces the number of layers and lets you clean up temporary files in the same layer that created them, keeping the image smaller.
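A common way to apply this note is to update, install, and clean up in a single Shell Form RUN instruction, so the package index never gets stored in a layer. The sketch below assumes an Ubuntu base image and uses git only as an example package.

```dockerfile
FROM ubuntu:20.04

# One RUN = one layer: update, install, and remove the apt lists together,
# so the downloaded package index is not kept in the image
RUN apt-get update && \
    apt-get install -y --no-install-recommends git && \
    rm -rf /var/lib/apt/lists/*

# Executable Form: no shell is involved, so there is no && chaining or $VAR expansion
RUN ["git", "--version"]
```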

5. CMD Instruction

Purpose: Used to define default commands or scripts that will execute when the container starts.

Execution Timing: Executed only at runtime (i.e., when the container is started).

Multiple Instances: You can have multiple CMD instructions in a Dockerfile, but only the last one takes effect.

Forms: Supports both Shell Form and Executable Form.

Override: The CMD command can be overridden by passing a different command when starting the container (docker run <image> <command>).

Example (alternatives; only one CMD would take effect):

# Executable Form
CMD ["python3", "app.py"]

# Shell Form
CMD echo "Hello, Docker!"

Shell Form vs. Executable Form

Shell Form: Runs the command through a shell (/bin/sh -c), so shell features such as variable expansion, pipes, and && chaining work. The command then runs under the shell, which may not forward stop signals to your application. Example: CMD echo "Hello, Docker!"

Executable Form: Runs the command directly without a shell, so the application itself is the container's main process (PID 1) and receives signals such as the SIGTERM sent by docker stop. This is more predictable and straightforward. Example: CMD ["python3", "app.py"]

Summary: Use Shell Form when you need shell features such as variable expansion, pipes, or chained commands, and prefer Executable Form otherwise for simplicity and reliable signal handling.
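The sketch below shows both forms side by side for a hypothetical app.py on a python:3.12-slim base; the APP_NAME variable and the image name myimage are illustrative assumptions. Because only the last CMD takes effect, the Shell Form alternative is left commented out.

```dockerfile
FROM python:3.12-slim
WORKDIR /app
# Hypothetical application file
COPY app.py .
ENV APP_NAME=demo

# Executable Form: python is started directly as the main process and receives
# the SIGTERM sent by `docker stop`. No shell runs, so $APP_NAME is not expanded here.
CMD ["python", "app.py"]

# Shell Form alternative (if uncommented, this later CMD would win):
# Docker wraps it in /bin/sh -c, so the shell expands $APP_NAME.
# CMD echo "Starting $APP_NAME" && python app.py

# Override the default command at startup:  docker run --rm myimage python --version
```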

6. ENTRYPOINT Instruction

Purpose: Defines commands or scripts that will always execute when the container starts, regardless of any additional arguments passed.

Execution Timing: Executed at runtime, just like CMD.

Multiple Instances: Supports multiple ENTRYPOINT instructions, but only the last one takes effect.

Forms: Supports both Shell Form and Executable Form.

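Override: Arguments passed to docker run do not replace ENTRYPOINT; in Executable Form they are appended to it (and replace any CMD). ENTRYPOINT can only be replaced explicitly with the --entrypoint flag of docker run. In Shell Form, ENTRYPOINT ignores both CMD and any arguments passed to docker run.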

Examples

# Executable Form
ENTRYPOINT ["python3", "app.py"]

# Shell Form
ENTRYPOINT echo "Hello, Docker!"
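ENTRYPOINT and CMD are often combined: ENTRYPOINT fixes the executable, and CMD supplies default arguments that any arguments given to docker run replace. A minimal sketch, assuming a hypothetical app.py that accepts a --port option and an image tagged myimage:

```dockerfile
FROM python:3.12-slim
WORKDIR /app
# Hypothetical script that accepts command-line arguments
COPY app.py .

# Fixed executable: always runs
ENTRYPOINT ["python3", "app.py"]

# Default arguments, appended to ENTRYPOINT when docker run supplies none
CMD ["--port", "8080"]

# docker run myimage                       -> python3 app.py --port 8080
# docker run myimage --port 9090           -> python3 app.py --port 9090
# docker run -it --entrypoint sh myimage   -> replaces ENTRYPOINT (and clears CMD)
```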

7. ENV Instruction

Purpose: Sets environment variables that will be available both during the image build process and at runtime when the container runs.

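Common Use Cases: Path settings and configuration parameters. Avoid putting secrets such as API keys in ENV: the values are stored in the image and can be read by anyone who runs docker inspect on it.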

Scope: Environment variables set with ENV persist in all subsequent instructions and layers, and in containers started from the image.

Example:

ENV APP_HOME=/usr/local/tomcat/webapps/

COPY target/web-app.war $APP_HOME

RUN echo $APP_HOME

In this example:

The ENV instruction sets the APP_HOME variable to /usr/local/tomcat/webapps/.

The COPY instruction uses the APP_HOME variable to copy the web-app.war file to that directory.

The RUN instruction prints the value of APP_HOME during the image build process.

8. ARG Instruction

Purpose: Defines variables that are available only at build time and can be used to pass build-time arguments.

Special Feature: It’s the only instruction that can be placed before the FROM instruction.

Scope: These variables do not persist in the container runtime.

Example:

ARG BASE_IMAGE=ubuntu:20.04

FROM ${BASE_IMAGE}
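An ARG declared before FROM is only usable in FROM lines; to read it inside the build stage, declare it again without a value. The sketch below also shows overriding a build argument with --build-arg and copying a build-time value into ENV so it survives into the running container. The names VERSION and APP_VERSION are illustrative.

```dockerfile
# Declared before FROM: usable only in FROM lines
ARG BASE_IMAGE=ubuntu:20.04
FROM ${BASE_IMAGE}

# Re-declare without a value to use the pre-FROM default inside this stage
ARG BASE_IMAGE
# Build-time-only variable with a default; override with --build-arg VERSION=2.0
ARG VERSION=1.0

RUN echo "Building version ${VERSION} on ${BASE_IMAGE}"

# Copy the build-time value into ENV so it is also available at runtime
ENV APP_VERSION=${VERSION}

# docker build --build-arg VERSION=2.0 -t myapp .
```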

9. WORKDIR Instruction

Purpose: Sets the working directory within the container for subsequent instructions, similar to the cd command in Linux.

Automatic Directory Creation: If the specified directory doesn’t exist, Docker will automatically create it.

Context: Relative destination paths in COPY and ADD, and the commands run by RUN, CMD, and ENTRYPOINT, are resolved against the WORKDIR, so you don't need to repeat absolute paths.

Example:

# Set the working directory to /opt/app
WORKDIR /opt/app

# Copy only specific files into the working directory
# Copy app.py from the src directory to /opt/app
COPY src/app.py .
# Copy requirements.txt to /opt/app
COPY requirements.txt .

Explanation

COPY Statements: The example uses COPY src/app.py . and COPY requirements.txt . to copy specific files rather than COPY . ., which would copy the entire build context. This is a best practice because it keeps unnecessary files out of the image and improves build efficiency.

10. USER Instruction

Purpose: Specifies the user for running commands within the container, enhancing security by avoiding root user execution.

Common Use Case: Setting up non-root users for application runtime.

Example:

RUN useradd appuser

USER appuser
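A slightly fuller sketch of the non-root pattern, assuming a hypothetical app.py and the illustrative names appuser and appgroup: create a system user, give it ownership of the copied files with COPY --chown, and switch to it before the runtime command.

```dockerfile
FROM python:3.12-slim

# Create an unprivileged system group and user (names are illustrative)
RUN groupadd --system appgroup && \
    useradd --system --gid appgroup --no-create-home appuser

WORKDIR /app
# --chown makes the non-root user the owner of the copied file
COPY --chown=appuser:appgroup app.py .

# Later RUN instructions, and CMD/ENTRYPOINT at runtime, execute as appuser
USER appuser
CMD ["python", "app.py"]
```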

11. EXPOSE Instruction

- Purpose: Documents the port that the container listens on at runtime.

Note: EXPOSE alone doesn’t publish the port; it simply documents the intended port for other users. To access the port externally, you must use -p in docker run.

Example:

EXPOSE 8080
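The sketch below pairs EXPOSE with the docker run flags that actually publish the port; nginx-demo is a hypothetical tag for an image built from this Dockerfile.

```dockerfile
FROM nginx

# Documents that nginx listens on port 80 inside the container; nothing is published yet
EXPOSE 80

# Publish container port 80 on host port 8080:   docker run -d -p 8080:80 nginx-demo
# Publish every EXPOSEd port on a random host port: docker run -d -P nginx-demo
```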

Docker Networking: Enabling Container Communication

Docker offers several networking options to facilitate communication between containers. Here’s an overview of the main types:

Bridge Network:

Description: The default network type for containers on a single host. On the default bridge network, containers can reach each other only by IP address; resolving container names requires a user-defined (custom) bridge network.

Use Cases: Ideal for local development and applications where isolation from the host network is required.

Custom Bridge Networks: You can create custom bridge networks to enhance container communication:

Features:

Containers can communicate with each other using both their container names (for easier reference) and their IP addresses (for direct communication), providing flexibility in networking.

You can define specific subnets and gateways for better network management.

Provides isolation from the default bridge network.

- Creation Example: docker network create --driver bridge my_custom_network

Usage Example:

docker run -d --name app1 --network my_custom_network nginx

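docker run -dit --name app2 --network my_custom_network centos

Communication Between Containers: Once the containers are running on the same custom bridge network, they can communicate with each other using their container names. The -it flags keep the centos container's default shell running; without them it would exit immediately.

Example:

- From app2, you can ping app1 by name (assuming ping is available in the image): docker exec app2 ping -c 3 app1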

Host Network

Description: The Host Network mode allows containers to share the host's networking stack directly. This means they use the host's IP address and network interfaces.

Use Cases: This mode is ideal for applications that require high performance and low latency, as it eliminates extra overhead from Docker's network layer.

Limitations: Containers in host mode have no network isolation from the host or from each other. Port-publishing options such as -p are ignored, and two containers cannot listen on the same port.

Overlay Network

- Description: The Overlay Network is used in Docker Swarm to connect containers that are running on different hosts. It creates a virtual network that spans across multiple Docker nodes.

Use Cases: This network is ideal for applications that need to scale across several machines, allowing services to communicate with each other seamlessly, even if they are on different hosts.

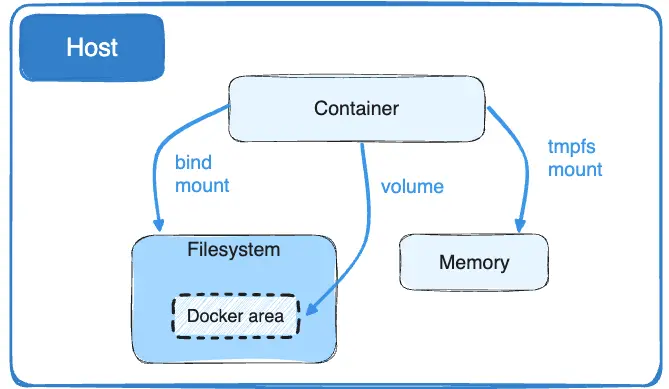

Docker Volumes

Docker volumes are a way to persist data generated by and used by Docker containers. They provide a mechanism for managing data outside the container’s file system, ensuring that data remains intact even if the container is removed or updated.

Key Features of Docker Volumes

Data Persistence:

- Volumes allow data to persist beyond the life of a container. This means that if you delete a container, the data in the volume remains available for future containers.

Sharing Data:

- Volumes can be shared between multiple containers. This makes it easy to share configuration files, logs, or any other data that needs to be accessible by more than one container.

Backup and Restore:

Volumes can be easily backed up and restored, which is essential for data management and disaster recovery.

How to Use Docker Volumes

Creating a Volume: You can create a volume using the following command:

docker volume create my_volume

Using a Volume with a Container: You can mount a volume to a container at runtime:

docker run -d --name mongo --network jionetwork -v jiovolume:/data/db -e MONGO_INITDB_ROOT_USERNAME=niranjan -e MONGO_INITDB_ROOT_PASSWORD=niranjan mongo

-d: Runs the container in detached mode (in the background).

--name mongo: Assigns the container a name (mongo).

--network jionetwork: Connects the container to a custom Docker network named jionetwork. This allows communication with other containers in this network.

-v jiovolume:/data/db: Mounts a volume (jiovolume) to the MongoDB container's /data/db directory, where MongoDB stores its database files. This ensures data persistence.

-e MONGO_INITDB_ROOT_USERNAME=niranjan: Sets the environment variable for the root username.

-e MONGO_INITDB_ROOT_PASSWORD=niranjan: Sets the environment variable for the root password.

mongo: Specifies the Docker image to use (latest MongoDB image by default).

Volumes vs. Bind Mounts

Persistent data storage is essential in many applications. Docker offers two primary storage options:

Volumes – Docker-managed storage, located by default under /var/lib/docker/volumes/ on Linux hosts. Volumes are ideal for consistent data persistence and backup.

Bind Mounts – Use a specific host directory, allowing greater flexibility and control.

Both survive container restarts. Volumes are the better choice when Docker should manage where and how data is stored, while bind mounts give containers direct access to specific host files and directories.

Security and Best Practices for Docker Storage

Use local volumes for single-host storage, and consider volume plugins such as REX-Ray if you need persistent storage across a cluster (e.g., backed by AWS EBS).

Regularly back up persistent volumes.

When creating volumes or bind mounts, specify directory paths carefully to avoid data loss.

Multi-Stage Builds in Docker

What Are Multi-Stage Builds?

Multi-stage builds allow you to use multiple FROM instructions in a single Dockerfile. Each FROM instruction begins a new stage, and the final image only includes the necessary components, making it significantly smaller and more efficient.

How Multi-Stage Builds Work

Define Each Stage: Start with a base image for building the application, then another image for the final container.

Copy Artifacts: Use COPY --from=builder to pull only the needed files from earlier stages.

Example:

# Stage 1: Build

FROM maven:3.8.5-openjdk-11 AS builder

# Set the working directory in the builder stage

WORKDIR /app

# Copy only the pom.xml and download dependencies to take advantage of caching

COPY pom.xml .

# Download dependencies (this layer is reused from cache as long as pom.xml is unchanged)

RUN mvn dependency:go-offline -B

# Copy the rest of the application code

COPY src ./src

# Package the application as a .war file

RUN mvn package -DskipTests

# Stage 2: Production

FROM tomcat:9.0-jre11

# Set the working directory in the production stage

WORKDIR /usr/local/tomcat/webapps/

# Copy the packaged .war file from the builder stage

COPY --from=builder /app/target/*.war ./app.war

# Expose the default Tomcat port

EXPOSE 8080

# Start Tomcat server

CMD ["catalina.sh", "run"]

Security and Best Practices in Docker

Ensuring security in containerized applications is essential. Follow these best practices to improve Docker security:

Use Official or Trusted Images – Start from secure and reputable base images to minimize vulnerabilities.

Use Smaller Base Images – Choose slim or Alpine-based images to minimize size.

Limit Container Permissions – Run containers with non-root users where possible.

Network Segmentation – Use custom networks and limit container communication to only necessary containers.

Scan Images Regularly – Use tools like Trivy or Aqua Security to scan images for vulnerabilities.

Update Images – Frequently update base images and dependencies to stay on top of security patches.
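Several of these practices can be combined in one Dockerfile. The sketch assumes a hypothetical app.py, an arbitrary unprivileged UID/GID of 10001, and that Trivy is installed for the final scanning step; it is one reasonable arrangement, not the only one.

```dockerfile
# Official, slim base image pinned to an explicit version tag instead of "latest"
FROM python:3.12-slim

WORKDIR /app
# Copy only what the application needs (hypothetical app.py)
COPY app.py .

# Run as an unprivileged numeric UID:GID rather than root
USER 10001:10001

CMD ["python", "app.py"]

# After building, scan the image for known vulnerabilities, e.g. with Trivy:
#   docker build -t myapp:1.0 .
#   trivy image myapp:1.0
```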

Common Docker Commands

| Command | Description | Example |
|---|---|---|
| docker build | Build an image from a Dockerfile | docker build -t myapp . |
| docker run | Create and start a container | docker run -d nginx |
| docker ps | List running containers (-a includes stopped ones) | docker ps -a |
| docker exec | Execute commands in a running container | docker exec -it mycontainer /bin/bash |
| docker logs | View logs from a container | docker logs mycontainer |
| docker inspect | View container/image details | docker inspect nginx |
| docker stop | Stop a running container | docker stop mycontainer |
| docker rm | Remove a container | docker rm mycontainer |
| docker rmi | Remove an image | docker rmi myapp |